A DEVASTATED dad has told how his murdered daughter was turned into an AI chatbot by a sick stranger.

Drew Crecente’s daughter Jennifer was 18 when she was killed by her ex-boyfriend Justin Crabbe.

Jennifer Anne Crecente with her father Drew on Christmas Eve in 2002. Credit: Crecente/Richeson family

Jennifer’s yearbook photo was used to create a chatbot on Character AI nearly two decades after she was killed. Credit: Jennifers Hope Organisation

Jennifer’s family described her as a ‘unique young woman who was fiercely protective of her friends’. Credit: Jennifers Hope Organisation

Jennifer in Mazatlan, Mexico in June 2003 while on a family vacation. Credit: Crecente/Richeson family

Crabbe was jailed for 35 years after shooting Jennifer in the back of the head with a sawn-off shotgun in woods near her home in 2006 – months before she was set to graduate from high school.

Now, nearly two decades after her brutal murder, Drew is fighting for justice for his daughter for a second time.

Last month, the dad, from Austin, Texas, made a horrifying discovery online after receiving a Google alert.

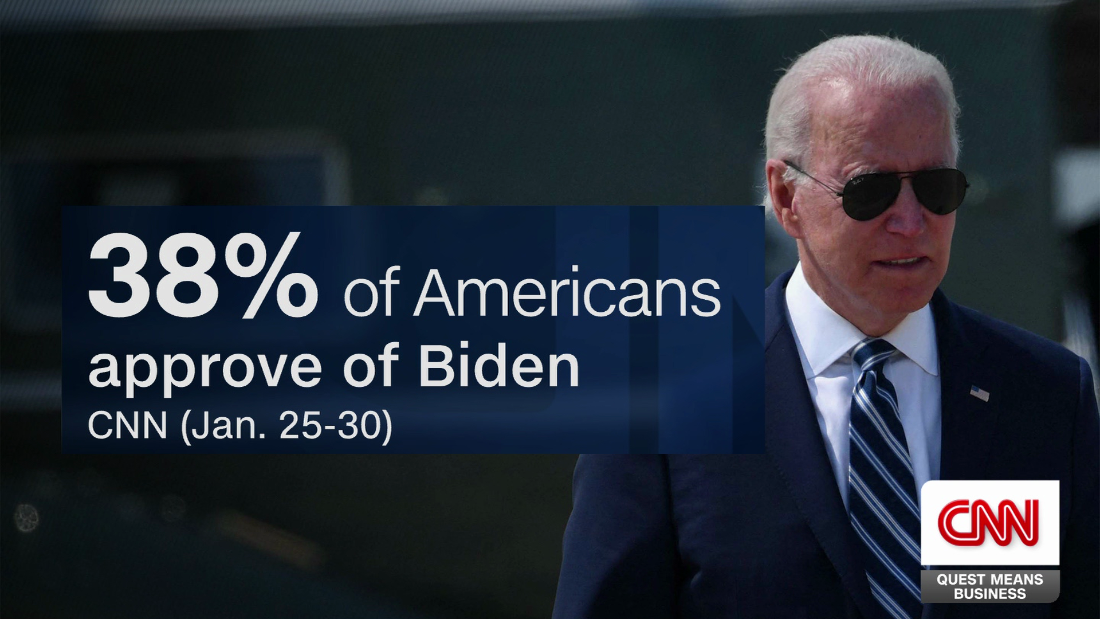

Drew found his daughter’s name and yearbook photo had been used to create an AI chatbot – allowing users to chat with her.

He said initial confusion turned into “dread and anger” after realising his beloved daughter’s identity was being used.

Drew blasted the creation on the website Character AI – which is one of the biggest platforms of its kind – as “unacceptable”.

Speaking exclusively to The Sun, he said he is considering legal action against the company after he was “re-traumatised”.

He said: “It wasn’t something that I was behind or anybody that would love and care about Jennifer that was behind creating this.

“Jennifer was a wonderful person. She’s missed every day and she’s my only child. I did not [just] lose a child, I also lost my identity as a parent.”

Drew is now facing the “unnecessarily painful situation” of trying to protect his late daughter from being exploited online.

Character AI told The Sun that the chatbot was “user-created” and was “removed as soon as we were notified about this Character”.

As Drew grapples with why someone would turn Jennifer into a chatbot, he fears that it could have been done by “an unhealthy person”.

Perhaps someone “who spends a lot of time very focused on people who are deceased, especially people who have been murdered”, he said.

To rub salt into the wound, when Drew logged a complaint asking for the bot to be removed and for assurance it could not be recreated, he received a “tone-deaf” response.

Two weeks after filing a complaint titled, “Murdered child recreated on Character AI,” he was thanked for bringing the issue to their attention and told that the team would “look into it further.”

“We appreciate you making our platform safe for everyone, Cheers!” the company’s message ended.

“That cheers with the exclamation mark after it I think tells me all I need to know about Character AI. This response was incredibly tone deaf – shockingly so,” Drew said.

Jennifer was shot dead by her ex-boyfriend, who was jailed for 35 years. Credit: Jennifers Hope Organisation

Jennifer’s family described her as ‘loving, funny and individualistic’. Credit: Jennifers Hope Organisation

Jennifer and her mum Elizabeth pictured at a Thanksgiving dinner. Credit: Jennifers Hope Organisation

The last time Jennifer saw her grandparents was in November 2005, three months before she was killed. Credit: Crecente/Richeson family

“It’s upsetting and it’s tempting to let the anger take over… the anger is empowering and the rest of it, being sad and being upset and re-traumatised is exhausting.”

Drew also received a message from the company’s interim CEO two days after he logged the original complaint, which was picked up by the press.

“It wasn’t a real apology,” Drew said.

“He offered his condolences on the tragic loss of my daughter.

“He also apologised for the upsetting reminder this week, not saying that they were responsible, but he was sorry that I was reminded of my daughter’s passing.”

The CEO told the grief-stricken father that the bot was not created by the platform itself but by one of its users, and that it was immediately removed when the issue came to light.

Drew has not heard more from Character AI – but an attempt by The Sun to create a bot in Jennifer’s name showed it was still possible.

This remains the case even after Character AI told The Sun that her “name was added to our block list”.

“It’s upsetting to know that it can still be done, but at the same time, it’s helpful because it just highlights the need for me to continue to explore this fully,” Drew said.

The platform maintains that it “takes safety seriously,” and aims “to provide a creative space that is engaging, immersive, and safe”.

What is Character AI?

“Chat with anyone, anywhere, anytime. Experience the power of super-intelligent chatbots that hear you [and] understand you,” the website reads.

Around 100 million chatbots have been created on the site by its 20 million monthly active users since it was launched in 2022.

These chatbots include the rich and famous like Taylor Swift, Elon Musk, and Donald Trump but also characters from fiction and fantasies like strict teachers, misbehaving students, and vampires.

Each bot is given a bio by the creator and any user can start up a chat with them.

In January, 3.5 million people were visiting the site every day.

Most users are aged between 16 and 30.

After receiving $150 million in funding in March last year, the company has been valued at around $1 billion.

However, it has since been reported by The Telegraph that chatbots of two other dead teenage girls from the UK were also created on the site.

Brianna Ghey was 16 years old when she was stabbed to death by two teenagers in 2023.

Molly Russell, 14, ended her life in November 2017 after watching self-harm and suicide content online.

A foundation set up in Russell’s memory called the chatbot “sickening” and an “utterly reprehensible failure of moderation”.

Meanwhile, Esther Ghey, Brianna’s mother, told The Telegraph that it showed how “manipulative and dangerous” the online world can be.

“It’s also a reflection of how our society regards victims of crime, especially victims of murder” which society sees as “entertainment,” Drew added.

“It shows how little time [Character AI has] spent on actually considering the potential harms of this service that they are providing to the public.”

He said such businesses have a “social responsibility” to prevent harm when creating and launching their technology.

A lawsuit against Character AI has been filed in Florida by the mother of 14-year-old Sewell Setzer III who took his life after he became obsessed with a chatbot.

The teenager allegedly spoke to the bot about death and also engaged in highly sexualised conversations while isolating himself from the real world.

Setzer shot himself just moments after the bot he allegedly fell in love with told him to “come home,” the lawsuit claims.

Character AI told The Sun it does not comment on ongoing litigation and that it is “implementing additional moderation tools to help prioritise community safety.”

Drew confirmed that it is “likely” he will also be taking legal action against the billion-dollar company.

Jennifer’s dad believes that what happened to his daughter is just the beginning of the problem with AI regulation. Credit: Jennifers Hope Organisation

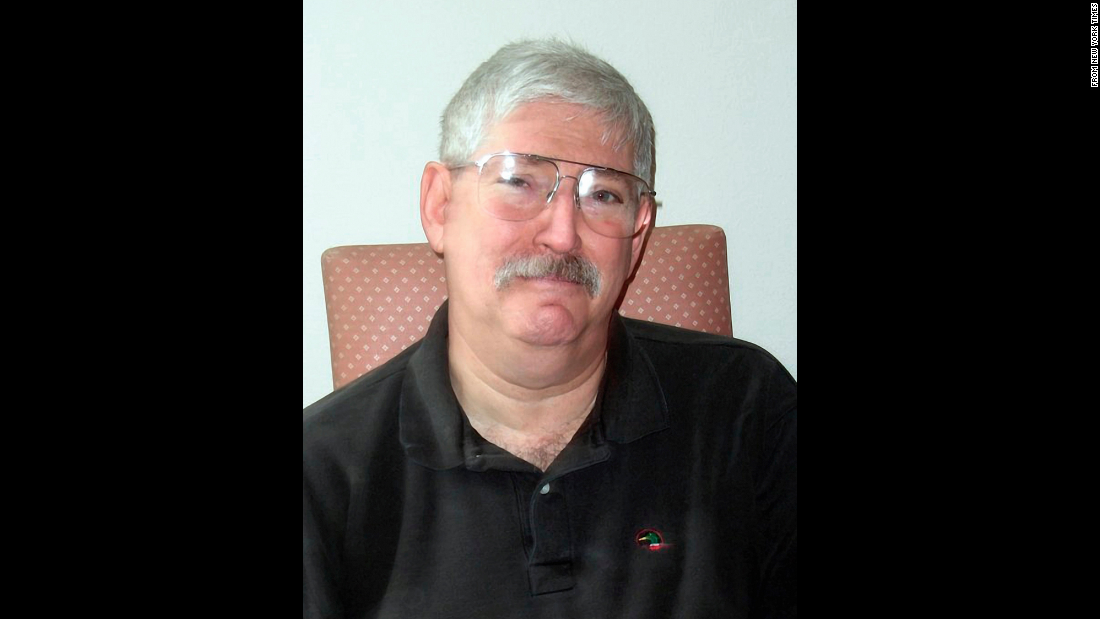

Drew spoke to The Sun about the chatbot and his fears for the future. Credit: The Sun

Character AI’s Statement

The Sun received the following statement from Character AI regarding the creation of Jennifer’s chatbot and the Setzer lawsuit.

“We do not comment on pending litigation.

“The Character using Jennifer Ann’s likeness was user-created, and as soon as we were notified about this Character, we removed it and added her name to our block list.

“Character.AI takes safety on our platform seriously, and our goal is to provide a creative space that is engaging, immersive, and safe.

“We are always working toward achieving that balance, as are many companies using AI across the industry.

“Users create hundreds of thousands of new Characters on the platform every day.

“Our dedicated Trust and Safety team moderates these Characters proactively and in response to user reports, including using industry-standard and custom blocklists that we regularly expand.

“As we continue to refine our safety practices, we are implementing additional moderation tools to help prioritize community safety.”

‘WORST OF US’

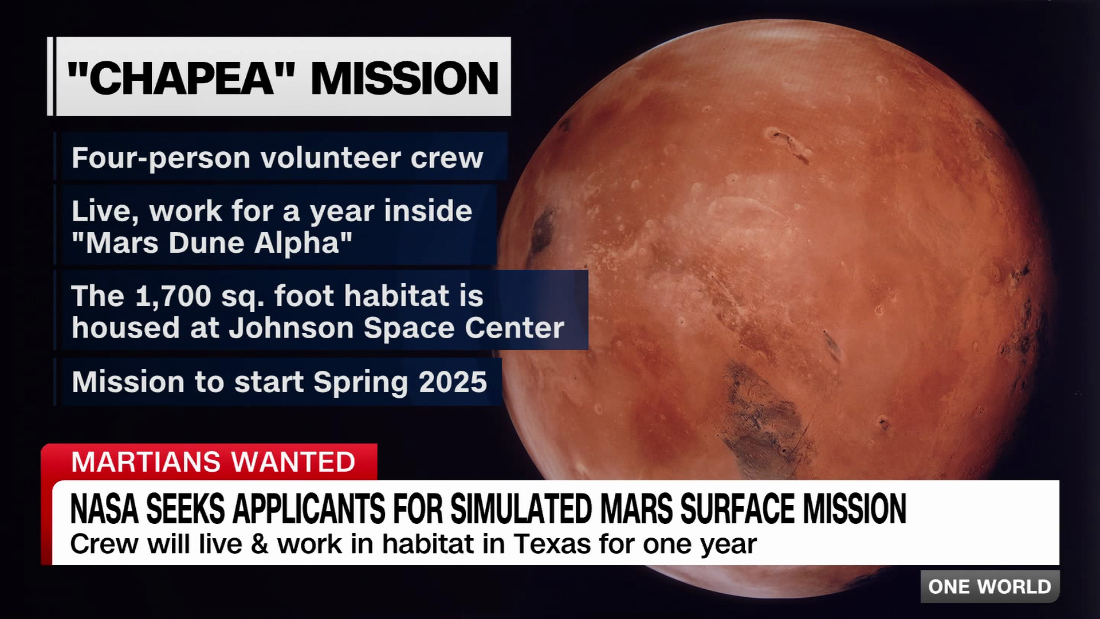

Drew believes that taking action now is the only way to prevent future harm, as he predicts that this issue will “increase exponentially” with “bad actors” using AI chatbots to cause harm.

“There’s no question about it,” Drew, who has a background in tech, said.

The dad is hoping swift legal action can see the laws surrounding AI change.

He noted the level of uncertainty around the programming of AI – which is less about coding and more about handing over a “huge library of data” that you want AI to learn from.

“In that library of data can be some really bad unhealthy things including material that is absolutely illegal,” he said.

“We shouldn’t be surprised when there becomes a race to the bottom through the use of AI.

“It could represent some of the best of us, but unless it’s really profitable to do that, I think it’s more likely that it will be used to represent the worst of us.”

He believes that what happened to Jennifer is just the beginning of the problem.

“We can’t wait for politicians,” Drew said.

“The reality is that public sentiment is going to be really important… to help prevent future harm.”

In considering AI and the laws surrounding it, Drew urged people to identify with Jennifer as a friend, sister, and daughter.

Jennifer’s father has issued a stark warning about the future dangers of AI (stock). Credit: Getty