AT 15 years old, Jess Davies felt like her world had ended when she discovered a picture of her in her underwear was being shared around by boys at her school.

But, horrifyingly, it wouldn’t be the only time she was a victim of picture-based abuse – many years later, her boyfriend would also betray her in the same cruel way.

Jess, from Aberystwyth, was working as a part-time model when she found naked photos – which had been taken of her when she was asleep – in a group chat on her boyfriend’s phone.

She says she quickly deleted the images and accepted it, not realising until years later that it was deeply disturbing and a criminal offence.

The horrific experiences led Jess, now 32, to become a women’s rights campaigner, raising awareness of online misogyny and of images being spread without consent.

She told The Sun: “I was so young and it [sharing pics] was something that had been normalised. This is just what happens. It wasn’t until I got a little bit older that I realised ‘that wasn’t right’.”

Jess, now single, also says there is “so much shame and stigma” towards female victims but that several recent shocking high-profile cases are finally beginning to shine a spotlight on the online abuse women face.

Gisele Pelicot, whose husband recruited 72 men online to rape her as she lay drugged, said during his court case, “shame needs to change sides”.

And Jess could not agree more.

‘Traded like Pokémon cards’

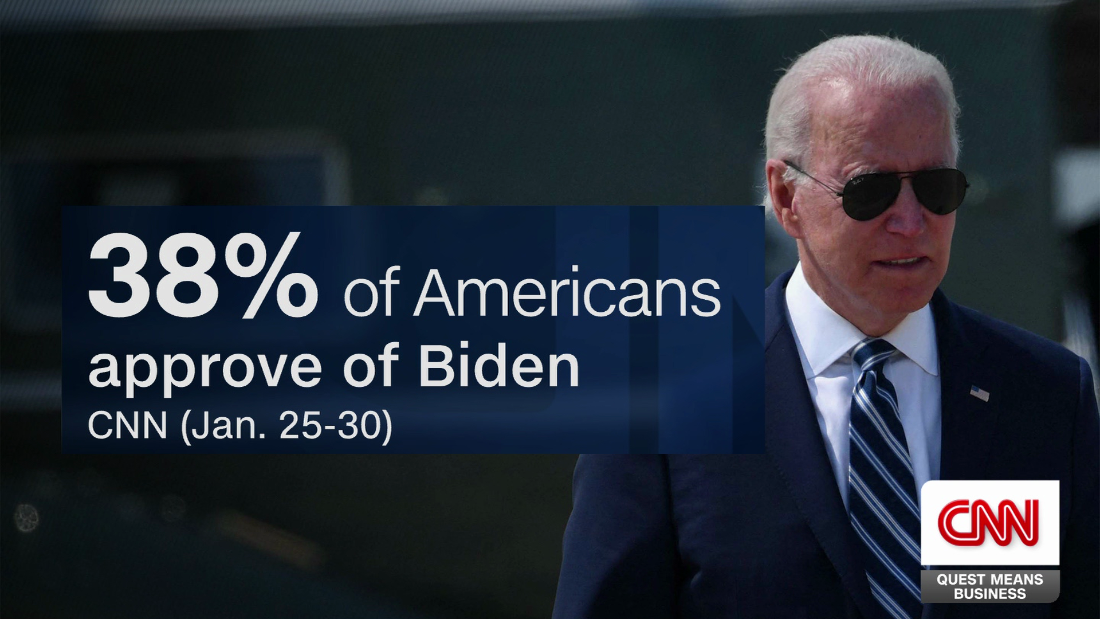

The Revenge Porn Helpline said it received 22,275 reports of image-based abuse last year, which is the highest it has ever seen.

Abuse of women online has been highlighted time and time again as it becomes disturbingly common.

Jess said most women don’t know that pictures of them are even circulating online on Reddit, Discord, Telegram and sick forums like 4chan – famous for its extreme content.

While taking a deep dive into the harrowing corners of the internet for her new book, No One Wants to See Your D*ck, she found that some sick individuals had so many nude images of women that they sorted them into folders.

She says: “People’s sons, brothers and friends are trading these photos like Pokémon cards and the women in the images have no idea that someone they trust is doing this.

“I saw teachers in there, people making deepfakes of their mothers, their aunts, their sisters. It was crazy. It is happening on such a big scale. You only need one photo to be able to create an explicit deepfake.”

And no one is safe, even those who have never taken a nude picture, as recent AI developments make it easy for these sick people to “nudify” a woman.

With just a profile picture and the click of a button, AI can remove clothes from an innocent photo, make it more seductive or even swap a person’s head onto a naked body – creating incredibly realistic deepfakes.

“These nudify bots post on their sites that they’re getting millions of people using them a day.

“Millions of women don’t know that they’ve been turned into explicit deepfakes. And then it’s like, ‘How do you keep track of that? How do you report that?’

“Of course, it’s not all men. I have so many great men in my life and my family. But it’s not just a select few either.”

In 2024, nearly 4,000 celebrities were found to be victims of deepfake porn, including actresses Scarlett Johansson and Emma Watson.

Speaking about deepfakes, Scarlett said: “Nothing can stop someone from cutting and pasting my image or anyone else’s onto a different body and making it look as eerily realistic as desired. The fact is that trying to protect yourself from the Internet and its depravity is basically a lost cause.”

And with AI technology advancing so rapidly, most police forces are struggling to find a way to deal with the influx of online abuse cases.

Jess says: “I think there’s just such a feeling of entitlement over women’s bodies. Most women don’t know this has happened to them.

“And yet, if you go into the forums, there are hundreds and hundreds and hundreds of women – every day you’ll find new images being posted of women who are being turned into explicit deepfakes, mostly by men and boys that they know.”

Describing what she saw while looking into these vile forums, Jess said the photos are sorted by location so that men can search for specific women.

“So it’d be like North Wales girls and Cumbria, Edinburgh,” Jess adds.

“It’s like, ‘Anyone got Jess Davies from Aberystwyth?’ and someone will be like, ‘Yeah, I do. I’ve got so-and-so, I’ll trade you’.”

Others play sick games between them, such as ‘Risk’.

One user posts an image online and, if another ‘catches’ them by responding within five minutes, the poster has to reveal the woman’s full name and social media accounts.

Jess has even seen her own modelling images used online for scams, porn sites, escort services and sex chats.

‘You know these men’

While this might seem like anonymous men in shadowy corners of the internet, these are people women know and likely trust.

“These are men that know women personally, because they’re men from your hometown.

“When you walk down the street or pop to the shops, or you’re at the school gates, that could be someone who’s actively trading your images without consent that you don’t know of.

“These are men that we know who are all doing this. And then we have to exist alongside them. It’s crazy that this is happening.

“It’s like Pokémon cards, right? It’s like, ‘Oh, who have you got? I’ve got this. I’ll send that’. It’s like you’ve got a sticker book that you’re all trading photos for. And yet it’s the women still that you’re angry at.”

Jess said whenever a survivor of this abuse speaks out, she’s immediately slammed as being “irrational”.

“Why are you more angry at women speaking up about their lived experiences than the men who are giving you a bad name?

“It’s not just some man’s behaviour living out online. It’s showing what they would do if they could be anonymous in real life.”

Jess pointed to the harrowing case of Gavin Plumb, who was jailed for plotting to kidnap, rape and murder celebrity Holly Willoughby.

His sick scheme was organised on forums and not one person reported him until an undercover police officer came across the twisted plot.

His desires were fuelled by deepfake porn shared online with others who shared his vile perversions.

Jess also pointed to the French case of Gisele Pelicot, a rape victim who waived her anonymity to stand against her own abusive husband.

She says: “Her husband found 50 plus men to rape his unconscious wife. He found them on forums.”

‘It’s never in the past’

Jess refuses to go on dating apps and finds dating difficult because of all she has suffered.

She has seen the worst of men when venturing into shadowy corners of the internet, seeing content that would leave anyone shaken.

When Jess was a teenager, a photo she shared with a boy she trusted made its way through the entire school and the football team.

Classmates texted her saying, “nice pictures, didn’t think you were that type of girl”, mocking her as she sat in art class.

Later, when she was a student and modelling part-time, her boyfriend took a photo of her while she was sleeping naked.

Jess saw it on his phone when he was in the shower – he had posted it in a group chat with his friends.

She deleted the image that was taken without her knowledge or permission but never confronted him, thinking “that is just what happens”.

The Welsh activist said the trauma of being a victim of image-based abuse is lifelong and she still feels the impact today.

“A lot of women are suicidal when it comes to this. It’s something that they carry around with them every single day.

“One victim said to me, ‘I can’t say I’ve got PTSD.’ Because it’s never in the past, because you’re always thinking, ‘Where was it shared before that, or who might have it, and who might upload it again?’”

Jess said most people don’t even realise what they’re doing is illegal.

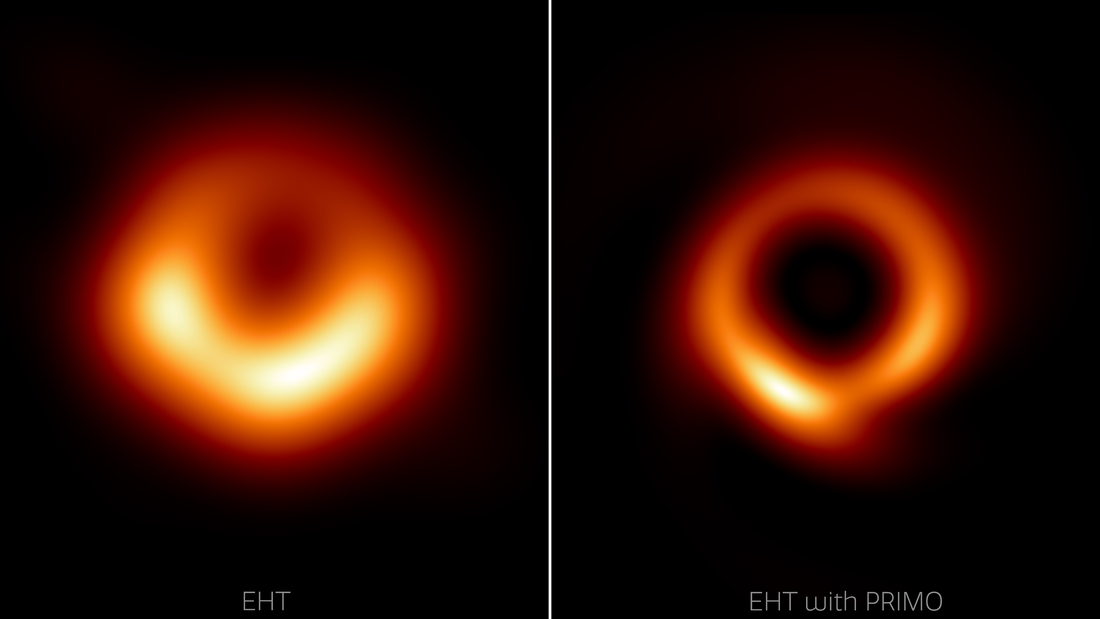

What are deepfakes?

Here’s what you need to know…

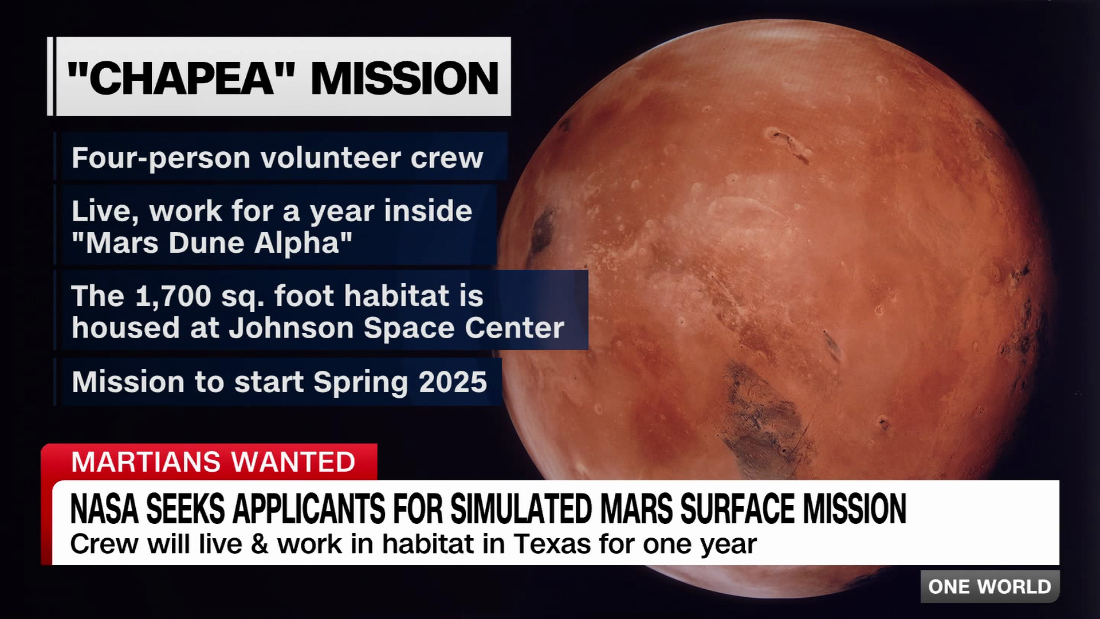

Deepfakes use artificial intelligence and machine learning to produce face-swapped videos with barely any effort

They can be used to create realistic videos that make celebrities appear as though they’re saying something they didn’t

Deepfakes have also been used by sickos to make fake porn videos that feature the faces of celebrities or ex-lovers

To create the videos, users first track down an XXX clip featuring a porn star that looks like an actress

They then feed an app with hundreds – and sometimes thousands – of photos of the victim’s face

A machine learning algorithm swaps out the faces frame-by-frame until it spits out a realistic, but fake, video

To help other users create these videos, pervs upload “facesets”, which are huge computer folders filled with a celebrity’s face that can be easily fed through the “deepfakes” app

While in recent years there has been a better grasp of what consent means in the physical world, the same can’t be said for the digital world, Jess explains.

Jess says: “If you’re a woman online, then you’re probably going to be sexually harassed and receive threats. You might have your images stolen. It’s like ‘Oh, well, that’s just what happens’.

“There seems to be a free-for-all when it comes to women’s bodies, specifically that they can do anything they want.”

Schoolboys are creating deepfakes of their classmates, and Jess has spoken to girls who talk about being bullied into sending nudes.

She thinks misogynistic ideology being picked up by teenagers stems from online content and masculinity influencers like Andrew Tate.

“Obviously Andrew Tate’s the loudest one out there. But there are so many of them. A lot of teenage boys think that’s funny and are reading it every single day.

“We might see a one-minute TikTok, but they’re doing hours and hours of live streams every single day into the bedrooms of these teenage boys.

“These live streams are unchecked, unregulated. They can say anything they want.”

Teenage boys being radicalised through online content was recently highlighted by Netflix’s hit drama Adolescence.

In the fictional series, a teen boy murders a girl who rejected him.

He had asked her out when she was vulnerable after having her private photos shared at school – and he was outraged that she would dare refuse him.

And while Jess praised the production, she noted how frustrating it is that it took a fictional TV show about a man, written by men, to draw attention to the problem.

“Women have been shouting about this for many years about what’s happening and trying to draw attention.

“Every single day there are real-life stories in the news of women losing their lives at the hands of male violence, or being followed and experiencing sexual harassment and sexual assault. And that wasn’t what spurred people’s empathy.

“It’s a sad reflection of how we need men as part of the conversation.

“Because a lot of men don’t want to listen to women when we’re talking about this. So that’s why we need men to join the conversation.”

No One Wants to See Your D*ck is available to buy from today.

You’re Not Alone

EVERY 90 minutes in the UK a life is lost to suicide

It doesn’t discriminate, touching the lives of people in every corner of society – from the homeless and unemployed to builders and doctors, reality stars and footballers.

It’s the biggest killer of people under the age of 35, more deadly than cancer and car crashes.

And men are three times more likely to take their own life than women.

Yet it’s rarely spoken of, a taboo that threatens to continue its deadly rampage unless we all stop and take notice, now.

That is why The Sun launched the You’re Not Alone campaign.

The aim is that by sharing practical advice, raising awareness and breaking down the barriers people face when talking about their mental health, we can all do our bit to help save lives.

Let’s all vow to ask for help when we need it, and listen out for others… You’re Not Alone.

If you, or anyone you know, needs help dealing with mental health problems, the following organisations provide support:

CALM, www.thecalmzone.net, 0800 585 858

Heads Together, www.headstogether.org.uk

HUMEN, www.wearehumen.org

Mind, www.mind.org.uk, 0300 123 3393

Papyrus, www.papyrus-uk.org, 0800 068 41 41

Samaritans, www.samaritans.org, 116 123
