LOOKING out of the window of her school bus, a 13-year-old girl is distracted by the laughs of the two boys sitting in front of her as they flick through pictures on a phone.

She peers over the backs of the lads’ seats – only to recoil with horror at the source of their amusement: a photo of her walking into their school canteen, completely naked.

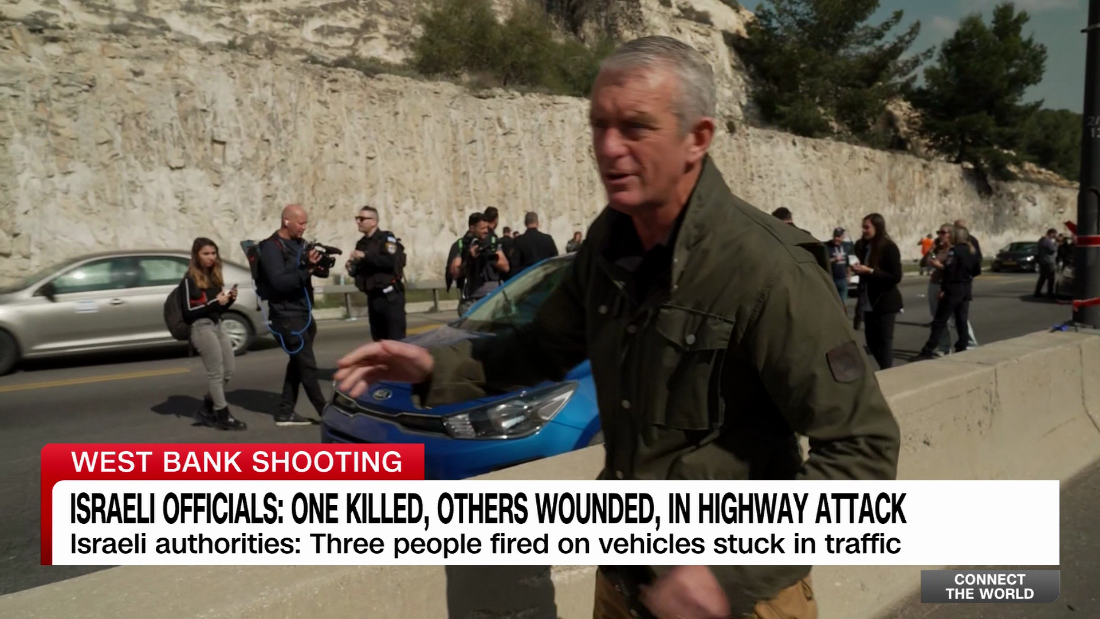

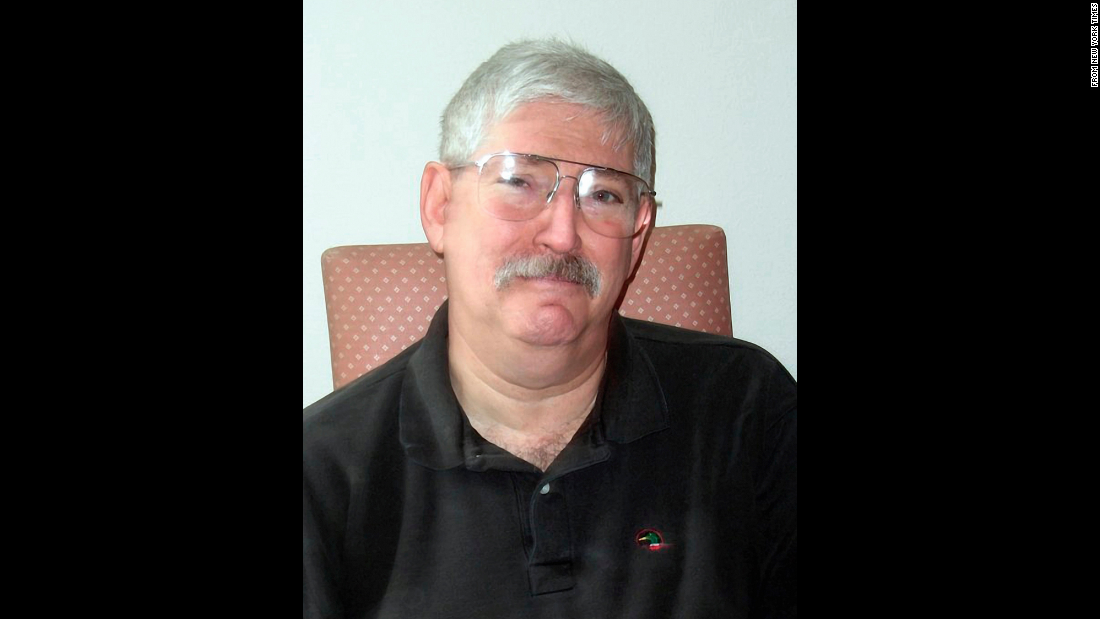

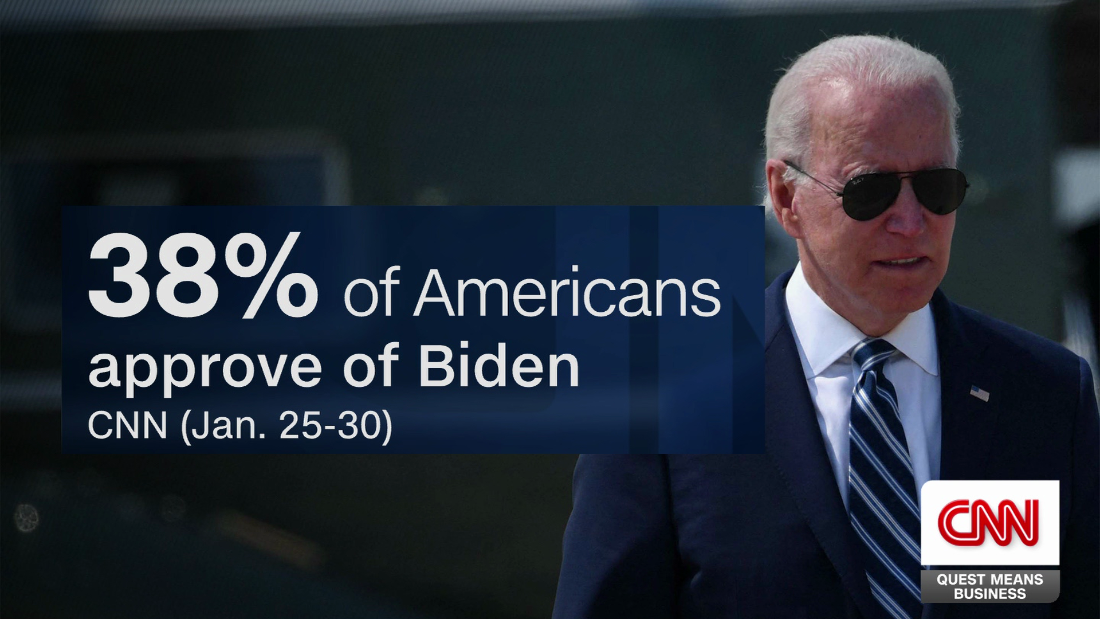

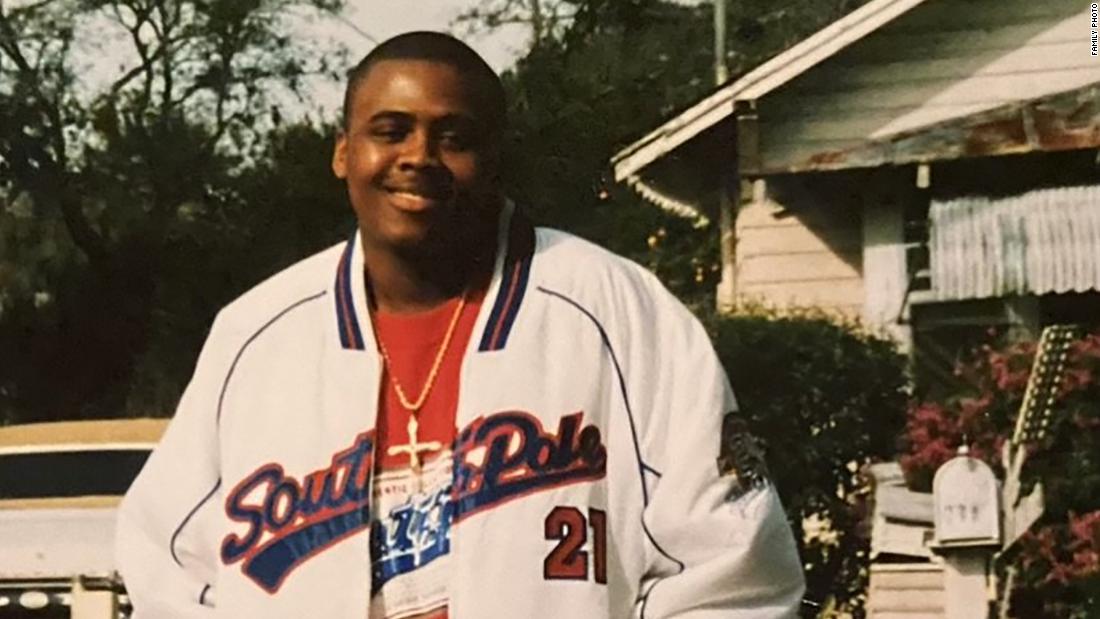

Elijah Heacock, 16, was driven to suicide when someone created a ‘deepfake’ nude picture of him

The sick apps are now being commonly used in schools

Schoolchildren have told of their horror after being targeted by classmates using the apps. Credit: Getty

The image is, quite literally, the stuff of nightmares.

Yet while it looks no less real than any other photograph, it is actually a deepfake image created by a ‘nudifying’ app, which has stripped the girl’s school uniform from her body.

A Sun investigation can reveal this disturbingly realistic artificial intelligence (AI)-based technology – which is used by millions and shockingly accessible on major social media sites – is sweeping British schools, putting children at risk of bullying, blackmail, and even suicide.

While innocent youngsters are being ‘nudified’, or having their faces realistically planted onto naked bodies, teachers are being digitally ‘stripped’ by their students as crude ‘banter’.

Outside the school playground, there are further disturbing problems, with data from the UK charity Internet Watch Foundation (IWF) revealing that reports of AI-generated child sexual abuse imagery have quadrupled in a year.

In the encrypted depths of the internet, perverts are sharing sick AI ‘paedophile manuals’, detailing how to use artificial intelligence tools to create child sexual abuse material (CSAM).

Some sickos are even creating deepfake nudes of schoolchildren to coerce them into forking over large sums of money – and for some victims, the consequences can be deadly.

This February, 16-year-old schoolboy Elijah Heacock took his own life in the US after being blackmailed for more than £2,000 over an AI-generated naked picture of himself.

“I remember seeing the picture, and I thought, ‘What is that? That’s weird. That’s like a picture of my child, but that’s not my child’,” grieving mum Shannon tells The Sun.

The photo of Elijah – a music lover who, at 14, had started volunteering to feed the homeless in Kentucky with his twin sister, Palin – was discovered on his phone after his death.

It was terrifyingly realistic – yet Shannon immediately saw signs it was fake.

“The District Attorney was like, ‘No, it’s a real photo’,” continues the mum, who had never before heard of ‘sextortion’ – where criminals blackmail a victim over sexual material.

“And we were like, ‘No, it’s not.’ The photo almost looked like somebody sitting in a cloud.”

She adds: “He had abs. Eli did not have abs – bless his heart, he thought he did.”

Millions using sick apps

As parents like Shannon have learned at a tragic cost, deepfakes are often so realistic-looking that experts can’t tell they are AI-generated.

On popular messaging apps, kids who wouldn’t dream of law-breaking on Britain’s streets are sharing fake nudes of their teen crushes – unaware it’s illegal.

British law prohibits the creation, or sharing, of indecent images of children, even if they are artificially made. Teens who do so for a ‘laugh’ face up to 10 years behind bars.

In spite of this, ‘nudifying’ apps and websites that are being accessed by millions of people every month are being advertised on social media, listed by Google, and discussed avidly on the dark web.

Our investigation found one website encouraging users to ‘undress’ celebrities – clearly, without their consent – with its ‘gem’-based prices starting at £14 per bundle.

“Our tool can undress anyone in seconds,” boasts the site.

Another, offering one free “picture undress” per day, tells users they can strip a photo of “a desired person”. And a third brags: “Let our advanced AI bring your fantasies to life.”

Nudifying apps and websites operate without consent

Users can pay to use the AI tool

Vile reviews have been left by users of one site

Reviews on such sites paint – if possible – an even more horrific image.

“I can create secret images of the woman I like,” wrote one user, in his 40s, of another site. “The sense of guilt is irresistible.”

Schools in crisis

For schools, the rise of nudifying apps has provided a near-existential challenge.

Experts warn senior staff are desperate to solve issues internally to avoid reputational damage, while teachers face career-threatening problems when fake photos of them are shared.

“The challenges of technology that nudifies photos or creates deepfake nude images is a problem most secondary schools and colleges around the country are now grappling with,” says top UK criminal defence lawyer Marcus Johnstone, who specialises in sex crime.

“I’ll bet it’s a live issue in every classroom.”

Marcus, managing director of Cheshire-based PCD Solicitors, adds that he is seeing “an ever-increasing number of children being accused of crimes because of this ‘nudify’ technology”.

“The schools don’t want this information coming out,” he claims.

“The last thing they want is to have their school in the local press having a problem with lads at the school ‘nudifying’ girls and it’s going around the school, around the internet.

“Parents of prospective children going there would go crackers.

“They’d say, ‘Well, I’m not sending my kid there’.”

Safeguarding expert Kate Flounders tells us: “The impact [of deepfake nudes] is enormous. For staff, it can be career-ending, even if the image is found to have been AI-generated.”

Calls for crackdown

In April, Dame Rachel de Souza, the Children’s Commissioner for England, called on the UK Government to ban apps that use AI to produce sexually explicit images of children.

Her comments came as the IWF’s analysts, who have special permission to hunt down and remove repulsive CSAM online, confirmed 245 reports in 2024 – a staggering 380 per cent increase on 2023.

Schoolchildren now fear that “anyone” could “use a smartphone as a way of manipulating them by creating a naked image using these bespoke apps,” said Dame Rachel.

British teens who have fallen victim to such technology have been calling the NSPCC’s Childline counselling service, with one girl revealing she has been left with severe anxiety.

The 14-year-old said boys at her school had created “fake pornography” of her and other girls.

They’d then sent the explicit content to “loads” of group chats.

“They were excluded for a bit, and we had a big assembly about why it was wrong, but after that the school told us to forget what happened,” the traumatised girl told Childline.

“I can’t forget, though.

“People think that they saw me naked, and I have to see these boys every day.”

Our children are in a war that we’re not invited to

Elijah’s mum Shannon

Nearly six months on from Elijah’s death, Shannon, a cheerleading coach, is struggling to deal with the loss of her beloved son, who is believed to have been targeted in a ‘sextortion’ scam by a man in Nigeria.

“We’re not doing very well right now,” she admits, adding jokingly: “Elijah was an amazing brother who drove everyone insane.”

She continues: “He was our tornado. Our house is so quiet and it’s sad.”

Shannon is calling for parents to chat to their kids about AI technology, with many mums and dads clueless about the explicit apps infiltrating their children’s classrooms.

“Talk to your kids, and read about it,” she urges. “Our children are in a war that we’re not invited to.”

Elijah Heacock with twin sister Palin, mother Shannon and father John Burnett

The teen is believed to have been targeted in a ‘sextortion’ scam by a man in Nigeria

Sinister creeps

Kate, CEO of the Safeguarding Association, has encountered UK-based cases where the images of schoolchildren and teachers were altered using “freely available” apps.

“The issue is, once the image is out there, it is nigh on impossible to get it off,” she warns.

“I am aware of one case where a female student was subject to this, managed to have the image removed, only for it to resurface several years later when she was in college.

“The trauma was enormous for her.”

Elijah had started volunteering to feed the homeless in Kentucky with his sister Palin

His devastated family are now fighting to raise awareness of ‘sextortion’

Of course, some children, and adults, create such content for more sinister reasons.

‘Nudifying’ services – many with brazen terms like “porn”, “undress”, “X” and “AI” in their names – have been promoted in thousands of adverts on leading social media platforms.

“These apps are far too easy to access and exploit,” says Rani Govender, Policy Manager for Child Online Safety at the NSPCC, which also wants such apps to be banned.

In June, Meta – the tech giant behind Facebook, Instagram and WhatsApp – announced it was suing the maker of CrushAI, an app that can create sexually explicit deepfakes.

How sick ‘nudifying’ apps work

THE technology behind ‘nudifying’ apps – used by children across Britain – is trained on “massive datasets of real explicit imagery”, explains AI consulting expert Wyatt Mayham.

“These ‘nudifying’ apps primarily use generative AI models like GANs (Generative Adversarial Networks) or newer, more sophisticated diffusion models,” he tells The Sun.

“The AI learns the patterns and textures of the human body, allowing it to ‘inpaint’ (fill in) or ‘outpaint’ (extend) a provided image, effectively stripping the clothing from a photo of a fully-clothed person and generating a realistic nude depiction.”

Referring to the rise in AI-generated CSAM among UK schoolchildren, Wyatt, CEO of Northwest AI Consulting, adds: “The danger goes far beyond a ‘prank’.

“This is a new form of scalable, psychological abuse.

“For perpetrators, it’s a low-risk, high-impact weapon for bullying, revenge, and control.

“More sinisterly, it’s a powerful tool for ‘sextortion’.

“A perpetrator can generate a realistic fake nude of a victim and then use it as leverage to extort money or, more commonly, to coerce the victim into providing real explicit images.”

Jurgita Lapienytė, Editor-in-Chief at Cybernews, warns that AI tools are “advancing quickly”.

She tells us: “Most of the apps are hard to stop because they use anonymous hosting and payments, often outside the UK.

“Social media giants and tech companies are not moving fast enough to block or report these tools, and current content monitoring often fails to catch them before damage is done.”

Meta alleged the firm had attempted to “circumvent Meta’s ad review process and continue placing” adverts for CrushAI after they were repeatedly removed for violating its rules.

The giant added it was taking further steps to “clamp down” on ‘nudifying’ apps, including creating new detection technology and sharing information with other tech firms.

But experts warn that adverts for such apps – often hosted anonymously and offshore – will only continue to pop up on a plethora of social networks as technology outpaces the law.

Many of these adverts disguise their offerings as “harmless” photo editor apps.


Others, however, are more forthcoming.

Our investigation found a sponsored advert, launched on Meta’s platforms a day earlier, for an AI-based photo app that boasted: “Undress reality… AI has never been this naughty.”

Meta has since removed the ad.

And on Google, we were able to access ‘nudifying’ tools at the click of a button. One search alone, made from a UK address, brought up two of these tools on the first page of results.

Schools are worried about the bad publicity these apps could cause for them. Credit: Getty

Former FBI cyberspy-hunter Eric O’Neill tells us: “AI-generated explicit content is widely traded on the dark web, but the real threat has moved into the light.

“These ‘nudify’ apps are being advertised on mainstream platforms – right where kids are.

“Today’s teens don’t need to navigate the dark web.

“With a few taps on their phone, they can generate and share explicit deepfakes instantly.”


The process – which can destroy victims’ lives – takes “seconds”, says Eric, now a cybersecurity expert and author of the upcoming book, Spies, Lies, and Cybercrime.

He continues: “A single photo – say, from a school yearbook or social post – can be fed into one of dozens of freely available apps to produce a hyper-realistic explicit image.”

Legal loophole

Although most ‘nudifying’ tools contain disclaimers or warnings prohibiting their misuse, experts say these do little to prevent users from acting maliciously.

Deepfake nudes shared online among teens are at risk of being sold on the dark web – where predators prowl chat forums for “AI lovelies” and “child girlies”.

A report published last year by UK-based Anglia Ruskin University’s International Policing and Public Protection Research Institute (IPPPRI) reveals the horrors of such forums.

One user chillingly wrote: “My aim is to create a schoolgirl set where she slowly strips.”

How to get help

EVERY 90 minutes in the UK a life is lost to suicide.

It doesn’t discriminate, touching the lives of people in every corner of society – from the homeless and unemployed to builders and doctors, reality stars and footballers.

It’s the biggest killer of people under the age of 35, more deadly than cancer and car crashes.

And men are three times more likely to take their own life than women.

Yet it’s rarely spoken of, a taboo that threatens to continue its deadly rampage unless we all stop and take notice, now.

If you, or anyone you know, needs help dealing with mental health problems, the following organisations provide support:

CALM, www.thecalmzone.net, 0800 585 858

Heads Together, www.headstogether.org.uk

HUMEN, www.wearehumen.org

Mind, www.mind.org.uk, 0300 123 3393

Papyrus, www.papyrus-uk.org, 0800 068 41 41

Samaritans, www.samaritans.org, 116 123

Another dreamed of a “paedo version of Sims combined with real AI conversational and interactive capabilities”, while others called the vile creators of AI-generated CSAM “artists”.

Shockingly, perverts can now even digitally ‘stitch’ children’s faces onto existing video content – including real footage of youngsters being sexually abused.

In many cases, girls are the target.

“At the NSPCC, we know that girls are disproportionately targeted, reflecting a wider culture of misogyny – on and offline – that must urgently be tackled,” says Rani.

“Young girls are reaching out to Childline in distress after seeing AI-generated sexual abuse images created in their likeness, and the emotional impact can be devastating.”

‘Digital assault’

Earlier this year, the UK Government announced plans to criminalise the creation – not just the sharing – of sexually explicit deepfakes, which experts have praised as a “critical step”.

The change in law will apply to images of adults, with child imagery already covered.

The Government will also create new offences for taking intimate images without consent, and the installation of equipment with the intent to commit these offences.

A Google spokesperson told The Sun: “While search engines provide access to the open web, we’ve launched and continue to develop ranking protections that limit the visibility of harmful, non-consensual explicit content.

“Specifically, these systems restrict the reach of abhorrent material like CSAM and content that exploits minors, and are effective against synthetic CSAM imagery as well.”

Meta said it has strict rules against content depicting nudity or sexual activity – even if AI-generated – with users able to report violations of their privacy in imagery or videos.

It also does not allow the promotion of ‘nudify’ services.

Childline’s Report Remove service allows young people aged under 18 to speak to a professional and confidentially report sexual images and videos of themselves. Through the service, the Internet Watch Foundation (IWF) and Childline can help to get these images removed and prevent them from being shared in the future.
