WANNABE jihadists could soon be coached to carry out atrocities by killer chatbots, Britain’s terrorism tsar has warned.

Extremists may exploit artificial intelligence to plan attacks, spread propaganda, and dodge detection with the help of persuasive bots, according to Jonathan Hall KC.

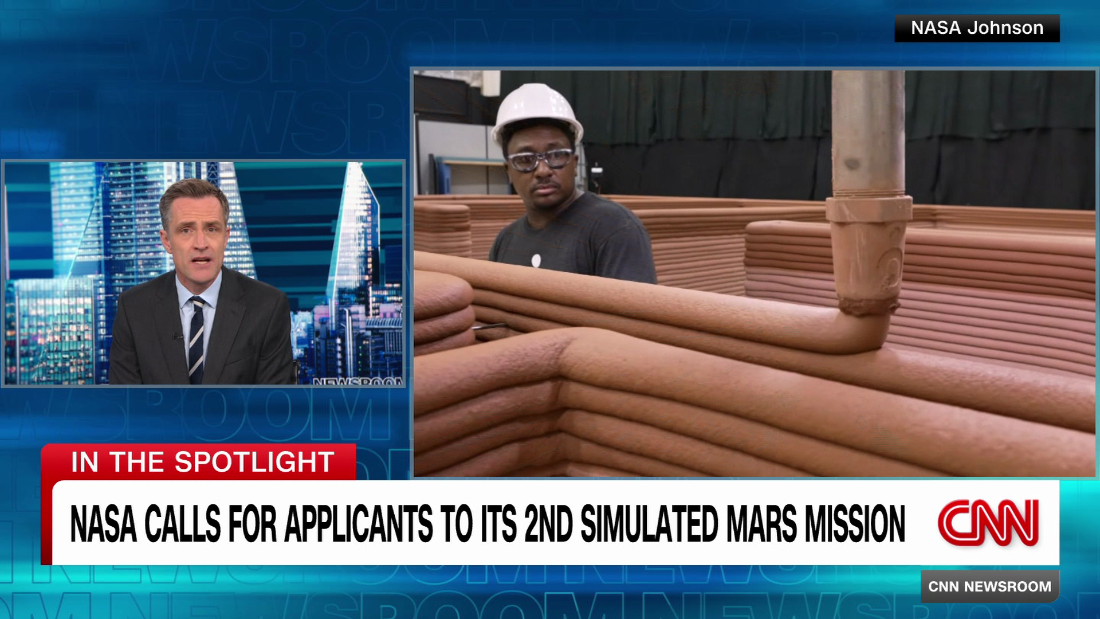

PAMr Hall said AI tools could make terrorist content faster and more powerful[/caption]

In his official 2023 annual report on terrorism, he said: “Chatbot radicalisation is the most difficult problem.”

Mr Hall warned that some terrorist-themed bots are already being shared online, saying: “Terrorist chatbots are available off the shelf, presented as fun and satirical models but as I found, willing to promote terrorism.”

He said much depends on what users ask the AI, explaining: “Chatbots pander to biases and are eager to please and an Osama Bin Laden will provide you a recipe for lemon sponge if you ask.”

He added: “Even where a chatbot has had its restraining features (guardrails) removed, or is positively trained to be sympathetic to terrorist narratives, the output overwhelmingly depends on whether the bot is asked about cake recipes or murder.”

He listed seven possible terrorism risks from generative AI, including attack planning, propaganda, evading online moderation, deepfake impersonation and identity guessing.

Mr Hall said AI tools could make terrorist content faster and more powerful, arguing “generative AI’s ability to create text, images and sounds will be exploited by terrorists”.

He recommended the Government “consider a new race-hatred based offence” to deal with potential cases that fall between terrorism and hate crime.

But he cautioned against rushing to legislate: “The absence of Gen AI-enabled attacks could suggest the whole issue is overblown.”

He said there was only one known case of a chatbot engaging in a conversation about planning an attack.

In 2021, Jaswant Singh Chail took a crossbow into the grounds of Windsor Castle intending to kill Queen Elizabeth II.

He had exchanged messages with a chatbot that endorsed his plan, and he was later jailed for nine years.

Mr Hall’s report also warned that online radicalisation remains a live threat on existing platforms.

He highlighted the case of Mohammad Sohail Farooq, who attempted a bomb attack on St James’s Hospital in Leeds in January 2023.

The report stated this was “a close-run thing” and that “the facts suggest self-radicalisation via the internet (TikTok), personal grievances together with ideological and religious motives, a rapid escalation, and a near miss.”

He argued that children and young people remain vulnerable to extremist content online.

Mr Hall wrote that they are “being seduced by online content into sharing material, expressing views, and forming intentions that can result in risk.”
